Nothing Premature Is Ever Good!

Most developers have heard the term “premature optimization.” It means you shouldn’t focus on optimizing your code for performance until your project is winding down. The reason is simple: hours spent making code as fast as possible can instantly be wasted if that code needs to change, and if it does need to change, the optimized version will be more difficult to modify.

What’s less widely discussed is the idea of “premature refactoring.” It’s one of the best lessons I’ve learned over a long career as a developer, and nixing early refactoring has been a tremendous boon to my enjoyment of coding.

A Case Study: Choo Choo!

Let’s consider a situation where you’re building a Light Rail Monitoring System. (Nice gig if you can get it.) There’s obviously going to be a bunch of UI, but you’re going to start with the Landing screen. It has the following requirements:

- A list with the next 25 trains set to arrive at a station.

- A larger view that shows detailed information for any train you click on.

- Ability to mark a train as tracked so you can be alerted when it arrives.

- A train animation along the bottom for when a tracked train arrives safely.

- A working clock display.

In my younger days, I’d immediately start breaking this screen down into pieces. I’d separate the clock, train animation, detail view, and list into four components I’d assume would be reused. Now, there’s nothing disastrous about this approach, and if you are working it out this way then kudos to you for being a true software developer.

What you might find strange is that when I look at this list of requirements, my design is one giant, monolithic screen with all of the logic included.

Why Not Componentize Now?

So, why is it that we don’t optimize early on in our code? It’s because we haven’t completely solved the problem yet, and optimizing early will likely be a waste of time. We also don’t know where our bottlenecks are and applying finite resources to problems that don’t exist yet would be wasteful.

But let’s not stop there. Let’s look at the Agile Methodology. In 2011, most developers are familiar with this approach, but may not be aware that it’s built on a fundamental philosophy similar to what we’ve discussed:

The longer you work on a project, the smarter you are about how to create it. Design upfront is less effective than design later in the project because you are wiser for having come that far.

With this in mind, you can better understand my seemingly odd approach to the Light Rail system.

Delaying Componentization

I have a lot of questions regarding the individual components. Let’s start with the train list…are the rows displayed the same way on other screens? Will the rows be homogeneous within the table? What sorts of actions need to occur when they are selected, and should those actions be reusable? Will the styling change on different screens (e.g., colors, fonts)? Is the data source going to be the same?

A lot of variability here. And since I’m an Agile developer, I prefer to wait as long as possible before committing to what the solution should be. Therefore, I choose to implement the list entirely in my Landing screen until I know more about the screen that needs it next.

What about the clock? Surely that’s simple, right? You’d be surprised. Should this clock sync to the local machine time, or sync to a server? (This is always a HUGE question.) Do the colors need to be configurable? (I’ve had apps where clock fonts change based on the color palette of the screen you’re on.) Should it display in military time, and is that an app setting that should be honored globally? What other scenarios will this clock be used in?

Think about it. A clock component is handy for more than the top-right of your screen. Perhaps it’s used in the train detail views to show scheduled arrival times? It can be displayed in different fonts and colors. It could be a running clock or a static clock. Do we know yet?

You Can Make a Better Component — Later

So when do I start refactoring? Well, first, let me emphasize a few points about my starting approach. I’m not advocating writing crap code. I’m not advocating writing code that will be harder to separate later; you must always be *anticipating* what will be refactored. For example, if I need a timer that updates the clock and another that updates the train schedule, I create two separate timers, even if they fire simultaneously. The fact that they update at the same time is happenstance.
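To make the timer point concrete, here is a minimal Python sketch, not the original app’s code. `PeriodicTimer` and `LandingScreen` are hypothetical names, and time is simulated with a `tick(now)` call instead of a real run loop so the behavior is easy to check. The clock and the schedule each own a timer, so changing one interval cannot disturb the other.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PeriodicTimer:
    """Hypothetical timer that calls `callback` every `interval` seconds."""
    interval: float
    callback: Callable[[float], None]
    next_fire: float = 0.0

    def __post_init__(self) -> None:
        if self.interval <= 0:
            raise ValueError("interval must be positive")

    def tick(self, now: float) -> None:
        # Fire once for every deadline that simulated time has reached.
        while now >= self.next_fire:
            self.callback(self.next_fire)
            self.next_fire += self.interval


class LandingScreen:
    """Monolithic screen in which each concern owns its own timer."""

    def __init__(self, clock_interval: float = 1.0, schedule_interval: float = 1.0) -> None:
        self.clock_updates: List[float] = []
        self.schedule_updates: List[float] = []
        # Both timers may fire at the same moment today; that is happenstance,
        # so they are kept separate rather than merged into one shared timer.
        self.clock_timer = PeriodicTimer(clock_interval, self.clock_updates.append)
        self.schedule_timer = PeriodicTimer(schedule_interval, self.schedule_updates.append)

    def tick(self, now: float) -> None:
        self.clock_timer.tick(now)
        self.schedule_timer.tick(now)
```

Because the timers are independent, slowing the schedule refresh to every 30 seconds is a one-argument change (`LandingScreen(schedule_interval=30.0)`) that can’t accidentally slow down the clock, and pulling the clock out into its own component later means moving one timer, not untangling a shared one.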

What I’m doing is delaying the refactor. Not avoiding it.

OK, so when do I begin this much-ballyhooed refactor? The short answer is, when it feels right.

Let’s say I work on the Scheduling screen next and it also has a clock and a list of trains. I note that the clock indeed has swapped color schemes, with the digits going from black to white and the background from white to black. I investigate further with the client and confirm these will be the only two color schemes. At this point, I refactor the clock out of the Landing screen and make it configurable with an enumeration so other screens can share the color schemes.
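Here is a minimal sketch of what that extracted clock might look like, assuming only the two schemes described above. `ClockColorScheme` and `ClockView` are hypothetical names, and `render` returns a string as a stand-in for real drawing code. The time is formatted as 24-hour purely for simplicity; whether to honor a global military-time setting is still one of the open questions from earlier.

```python
from dataclasses import dataclass
from datetime import datetime
from enum import Enum


class ClockColorScheme(Enum):
    """The two schemes confirmed with the client: (digit color, background color)."""
    BLACK_ON_WHITE = ("black", "white")
    WHITE_ON_BLACK = ("white", "black")

    @property
    def digits(self) -> str:
        return self.value[0]

    @property
    def background(self) -> str:
        return self.value[1]


@dataclass
class ClockView:
    """Hypothetical clock component extracted from the Landing screen."""
    scheme: ClockColorScheme = ClockColorScheme.BLACK_ON_WHITE

    def render(self, now: datetime) -> str:
        # Describe what would be drawn: the time plus the scheme's colors.
        return f"{now.strftime('%H:%M')} [{self.scheme.digits} on {self.scheme.background}]"
```

The Landing screen keeps the default scheme while the Scheduling screen passes `ClockColorScheme.WHITE_ON_BLACK`. Server time sync and other unanswered questions stay out of the component until a screen actually needs them.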

Coders’ Block

When I was a little boy and started coding, it was so much fun. I wrote terrible code, but I had fun doing it. By the time I became a professional, I wrote more organized code, and everything was still OK.

It wasn’t until years later that I started dabbling in Design Patterns. It was necessary education, but it screwed me up. Suddenly, every time I wrote a line of code, I worried whether it was optimally written. I tried to extract reuse from everything. That began a cycle of “tortured programming” for me, and writing any code became an exercise in frustration.

The problem was that I was trying to anticipate how every blob of code could be reused without having the necessary information of how that code would be reused. Delaying the refactor allowed me to focus on the needs of the now and not the imagined needs of the future.

Iron Chef America: Mozzarella!

And now for something completely different.

Some friends and I got together to have a cooking day. We made three new recipes. As part of it, I was feeling creative and took some footage to make an Iron Chef America parody. Here it is. :-)

I used iMovie to stitch everything together. The challenge, obviously, is that it’s a lip-synch. When I didn’t get a perfectly accurate capture of a bit of dialogue, I had to do some clip splitting and speed things up in places. I’m pretty happy with the results.

So, only two clips in the whole movie include their audio. Can you identify them? :-)

Comments Never Get Out of Date

This post is a response to Riyad’s assertion that comments are a waste of time. It’s definitely worth a read, but the gist of it is that code documents itself and that comments are more likely to fail than help. It’s sometimes true, but so is just about everything. :-) Reality can get in the way, though.

In every programming class I took in college and just about every language book I’ve read, one of the first things you learn is how to create comments in your code. In fact, in those college classes, if you dared hand in code without comments, you got dinged severely with “Explain what this does!!!1!!1!one”

Riyad Mammadov makes an excellent assertion that comments can make our code worse. He echoes the words of Uncle Bob Martin, famed software author and surprisingly rabid Tea Party fanatic, that comments quickly become outdated when the code below them changes. I agree with this and I comment far less often than I used to.

Unfortunately for all of us, that doesn’t mean comments are obsolete. I’d like to take Riyad’s key argument and reverse it on him if you please.

His key argument is that comments would be great if (A) they changed along with the code they refer to and (B) the developer had writing skills. I’d say both of those are often no’s. However, not leaving comments equally assumes (A) the next developer who comes along understands the original intent of the code and (B) the original developer had naming skills.

What I’m saying is that encouraging a developer to skip comments in favor of writing good code will likely result in that developer gladly skipping the comments while (mistakenly) thinking they are writing good code to make up for it.

Sound familiar? It’s the same reason many converts to the Agile Methodology are so happy to hear they can stop writing documentation, and do, all while both their code and the shipping product continue to look just as they did before. So much for that.

Case In Point

I’ve never met a developer who’s as good as they say. INCLUDING ME. I don’t believe you should comment every line or group of lines of code if the code is obvious. But if you’re writing a block of algorithmic logic, leave a note on what it’s doing, and perhaps the math behind it, to ease the next person’s load. An example:

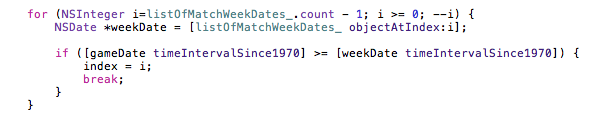

Quick, what does this code do? Maybe you can figure it out easily, maybe not. I’d like to think that a few seconds’ glance would tell you:

- The loop iteration is backwards because we’re searching backwards.

- When we find what we’re looking for, we capture a reference and end the loop.

- We’re doing a date comparison of some sort.

I don’t think the date comparison is inherently complex. However, I think this sort of code segment can make quite a few developers stop and look — for a while. As you read this code, did you find yourself trying to unravel the date comparison? Did your mind silently work it out…

OK, the game date is compared to the week start date, and if the game date is greater than the week start date…uhhh, it’s coming after it…and we’re looping backward, so it meeeeeans it wasn’t after it previously. So, now it is….wait, isn’t it always greater than the start week? Oh, right, it’s not greater than the weeks after. OK, I got it.

It’s understandable. The backwards looping, combined with a greater-than date comparison, is a little weird to digest. It’s the same reason we can have a little trouble reading Ruby code that uses unless rather than if. We like to read left to right, we like our logic positive, and we like to order things chronologically. And that’s why, when I see unless in code, I just say if not in my head. :-)

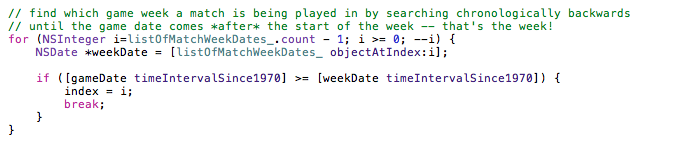

Now, here’s the same code with a simple comment:

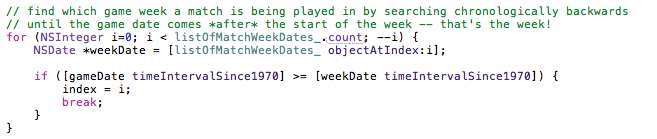

Simple question…if you were reading this code and came across this comment, wouldn’t it have saved you some mind time? Now, there’s a chance the comment may have become inaccurate over time. Perhaps someone came along and changed the logic but didn’t update the comment. But what if the code had then become this?

Uh oh. After a quick inspection, the comment is out of date because that code is clearly searching chronologically. But wait! The loop is still decrementing! Because the comment gave you some historical insight, you can quickly deduce that someone tried to change the loop order but bungled the iteration (and the logic). That comment being out of date was still useful!
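Since the example code above exists only as screenshots, here is a hypothetical Python reconstruction of the same kind of search, with the kind of comment argued for here. It assumes the loop walks backwards over a sorted list of week start dates to find the week a game falls in; `week_index_for_game` and `week_starts` are illustrative names, and the sketch uses “on or after” so a game on a week’s first day counts toward that week, which may differ from the original comparison.

```python
from datetime import date
from typing import List, Optional


def week_index_for_game(week_starts: List[date], game_date: date) -> Optional[int]:
    """Return the index of the week containing `game_date`, or None.

    Assumes `week_starts` is sorted oldest-first.
    """
    # Search backwards from the most recent week. The first week that started
    # on or before the game date is the one the game belongs to; every week
    # skipped on the way down started after the game.
    for i in range(len(week_starts) - 1, -1, -1):
        if game_date >= week_starts[i]:
            return i
    return None  # the game predates the first week
```

If someone later flipped the loop to run forward without changing the comparison, almost every game would land in week 0, and the comment is what tells the next reader the original intent. (In Python, `bisect.bisect_right(week_starts, game_date) - 1` finds the same index, returning -1 rather than None for a game before the first week.)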

No, this may not always be the case. But let’s be serious. We always need to write clean, well-named code. And comments can’t hurt. Assuming a comment speaks for the code accurately is as silly as assuming a function does exactly what its name says it does.

I’ve never met a comment I didn’t appreciate when it adorned some tricky logic. Accurate or not, it will give you a clue as to what you’re looking at. And if you think your code is so hot it doesn’t need commentary, well, it’s not your opinion that counts here.

Coding Standards Are a Farce!

As young companies prosper and development teams grow, the conversation inevitably turns to agreeing on a set of coding standards that every developer must rigidly follow. We’ve all sat through the same tired meeting.

The Meeting

“Every one of us must write our code the same way to be successful.”

“But we’ve had success. Why should I be forced to place my curly braces on their own lines?”

“Because it will make our code look clean! And you have to preface your instance variables with m_, too!”

“Why? My IDE already identifies fields and gives them their own syntax color, too.”

“Not anymore, we’re not allowing the use of Eclipse anymore, it’s too slow to use!”

“If I can’t use Eclipse, then you can’t start your interface names with the letter I…”

…and so on, and so forth…

I’ve never quite gotten it. No company I’ve ever worked for started on Day #1 with a set of coding standards. The initial team usually just happily hacks away until either (A) the company dies or (B) the company thrives. When the latter happens, they hire more developers and then the coding standard talk comes about.

But why? I mean, sure, coding standards can make your source code look more homogeneous, like it may have been written by a single developer. But it wasn’t. Now you’re forcing all your developers to code in one style that nobody (but the implementor) will like. They won’t be as happy. They won’t be as free. Their muscle memories will continue to type the way they always did, and still do at home, and they’ll constantly have to correct mistakes. The lead developer will see the offenses in the source repository and send obnoxious emails that say, “Uhh, yeah-h-h-h, you didn’t place all your variable definitions on their own line in two of your files. We went over this. Fix it!”

On top of all that, the code will never be homogeneous because you can never standardize on talent and ideas. Solutions will vary in complexity, and the reader will notice.

Coding standards are a farce! Let them die.

If Not Coding Standards, Then What?

I’ll tell you what. If you want your developers to form into a cohesive unit, don’t push coding standards on them. Let them do what comes naturally; don’t disturb that force. What you should do is focus on education and teaching by example.

Recently, I joined Double Encore in Denver and, as expected, I met up with a lot of new coding styles that I wasn’t familiar with. One of our Dev Leads, Dave, did a presentation on Memory Management in Objective-C, and while I disagreed with a couple of things, there were some new things I found simply bizarre. One was to avoid using Xcode templates when writing code.

What?? No templates??

Yes. The point was that our view controllers tend to fill up with empty methods stuffed with Apple’s commentary on what to do. We have stretches of them that go (properly) unimplemented. They bloat our source and can make it hard to pick out the good stuff when reviewing the code.

(On top of that, the templates don’t call [super loadView] which leads to all kinds of crashes. But, I’ve outlined that and other grievances in 10 Things Apple Could Do to Make iPhone Development Perfect.)

When he said to stop using templates, I thought it was ludicrous. The next day, I gave it a try practically out of spite. This was stupid. I was supposed to create UITableViewDataSource objects without the template? Grotesque. Well, what I found was that it actually is pretty easy. Xcode’s autocomplete fills in about 95% of the methods you need and, sure enough, you find that your source files are easier to read.

So now I don’t use templates anymore.

Now, did I need to have a coding standard imposed on me? Not at all. I was perfectly happy and willing to use templates. I tried it, and I liked it. I was sold. I believed. I wasn’t forced to do it because “it’s better this way, trust me”; I do it now because I really believe it’s better. What a world of difference!

Code Reading

If I started a development company today with a dozen developers working for me, I would want to get everyone doing the important stuff. Unit testing. Following a methodology as a team. Improving debugging techniques. Learning the language completely. Mastering the platform. Learning new third-party tools that make us more productive and ease our workload.

I wouldn’t teach it myself; I’d have the developers teach themselves. Slicing out some time each week for a presentation is a decent start, but it’s going to feel authoritarian and forced. Were you ever in a brownbag about a new application framework you didn’t want to use, but your manager was all excited about it and you knew you were doomed to its standardization? What I’d really prioritize is actively encouraging my developers to send code to one another to review.

Code reading is the single best activity any developer can take part in. Much like book learning, the advantage is that you learn things you never thought to look for. Think about it: when you run into a problem, you do a search and likely end up googling your way out of it. You get the code segment you were looking for, plug it in, and your feature is complete. But when you do some code reading on an open source project or your peers’ work, you are exposed to all manner of techniques you didn’t even know existed.

A fellow consultant of mine learned just a couple of weeks ago that in Objective-C you can send messages to nil without a crash. In fact, it’s encouraged. In almost every other language, this is something you spend a lot of time avoiding! He was a long-time Java developer who grew up avoiding null references. Not only did he not know about this language difference, he never would have thought to look it up! It never occurred to him it would be possible! Now, if he had read an Objective-C book cover to cover, there’s no doubt he would have learned it. That’s the advantage of both book reading and code reading: you learn outside the box.

Try it. Take 10 minutes, and read as much source as you can of the WordPress iPhone App. Don’t try to understand how the whole app works together, just read individual classes. Read the code. I guarantee you will learn no less than 5 new things in those 10 minutes — probably more!

And when a team learns together, they code together. They will begin to share not only coding styles but coding techniques, and because they not only learn the best way to do things but adopt it without force, the whole practice becomes natural and empowering.

Because. Quite simply. If you have to force someone to code a certain way, it’s probably not important.

UPDATE: Based on some comments, keep in mind that not having a set of coding standards does not mean an apocalyptic horde of horrible programmers is now free to rain terror on your codebase. Physical beatings are also a teaching tool. Also, some form of talent is required. No monkey coders.

Using MotoBlur as a Dev Phone

At my office, I’m using a new Motorola MotoBlur as a dev phone for Android development. Wanted to share a little wrinkle in setting it up.

As is common with Android, going to Settings -> Development presents you with an option to use your phone as a dev phone by linking it up to your laptop via USB. However, on MotoBlur, this isn’t enough. When you use adb to find connected devices, your MotoBlur won’t appear.

To get things working, drag down from the status bar on the phone and select “USB Connection”. You will see your syncing options. Select “None”. Now you can hook up your phone via USB and adb will find it.
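If you’d rather script the check than eyeball it, here is a small Python sketch that runs `adb devices` and keeps only entries whose state is `device` (connected and ready for debugging). It assumes `adb` from the Android SDK is on your `PATH`; `parse_adb_devices` and `connected_devices` are hypothetical helper names.

```python
import subprocess
from typing import List


def parse_adb_devices(output: str) -> List[str]:
    """Return the serial numbers that `adb devices` reports in the "device" state."""
    serials = []
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines and the "List of devices attached" header.
        if not line or line.startswith("List of devices"):
            continue
        # Device rows look like "<serial>\t<state>"; states such as "offline"
        # mean adb sees the phone but can't use it yet.
        parts = line.split()
        if len(parts) >= 2 and parts[1] == "device":
            serials.append(parts[0])
    return serials


def connected_devices() -> List[str]:
    """Run `adb devices` and return the serials of ready devices."""
    result = subprocess.run(["adb", "devices"], capture_output=True, text=True, check=True)
    return parse_adb_devices(result.stdout)
```

Per the steps above, an empty list from `connected_devices()` with the phone plugged in is the cue to check that the USB Connection mode is set to “None”.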

That is all.

I've been writing mobile apps professionally for 10 years: BlackBerry, Java ME, iPhone, Android. I've gotten dozens of apps into the various App Stores for dozens of clients, big and small.
